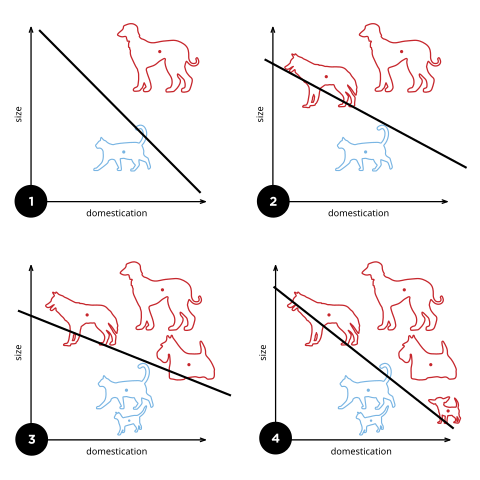

Artificial intelligence aims to model human intelligence in machines, enabling them to perform tasks that typically require human intelligence, such as visual perception, speech recognition, and decision-making.

For example, AI algorithms can distinguish between images of cats and dogs with no input other than the images themselves.

Leading technology companies, including Google, Amazon, Microsoft, and Apple, invest heavily in AI research, development, and deployment, recognizing its potential to shape the future.

AI-powered solutions are transforming industries through applications such as healthcare diagnostics, autonomous vehicles, personalized marketing, and predictive maintenance. AI models such as GPT-3 (Generative Pre-trained Transformer 3) and chatbots like ChatGPT showcase the capabilities of AI in natural language processing, generating human-like responses and enabling conversational interactions.

The Perfect Duo: How GPUs and AI are Taking Over the World

Semiconductors are central to enabling AI, allowing machines to perform complex logical computations and analyze vast amounts of data. Specialized AI chips, such as graphics processing units (GPUs) and tensor processing units (TPUs), have been developed to optimize AI workloads.

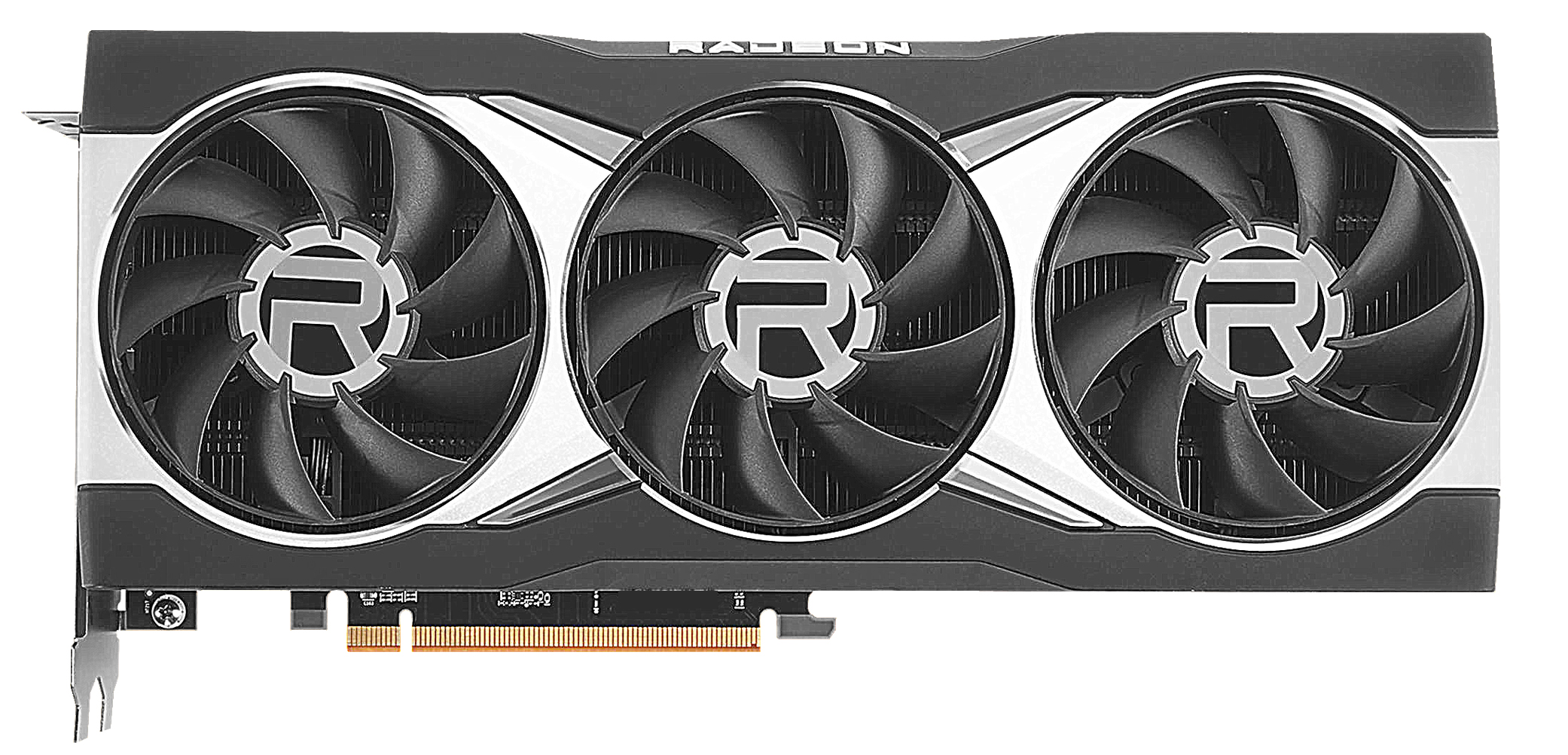

GPU (Graphics Processing Unit) technology refers to the specialized hardware designed for rendering and processing graphics, as well as performing parallel computations. Originally developed for handling graphics-intensive tasks like video games and computer-generated imagery (CGI), GPUs have evolved into powerful processors capable of accelerating a wide range of computationally intensive tasks.

Key characteristics of GPU technology

Parallel Processing: GPUs excel at parallel processing, allowing them to perform multiple calculations simultaneously. They feature a large number of cores or stream processors, which can execute tasks concurrently, leading to significant performance improvements compared to traditional central processing units (CPUs).

Graphics Rendering: GPUs are optimized for rendering high-quality graphics and images in real-time. They can handle complex tasks like geometry processing, texture mapping, shading, and rasterization, enabling smooth and realistic visuals in video games, virtual reality, and computer-aided design (CAD) applications.

Compute Power: Modern GPUs are not limited to graphics-related tasks alone. They are increasingly used for general-purpose computing, known as GPGPU (General-Purpose Computing on Graphics Processing Units). By leveraging their parallel processing capabilities, GPUs can accelerate various data-intensive computations, including scientific simulations, machine learning, deep learning, and data analytics.

API Support: GPUs support industry-standard APIs (Application Programming Interfaces) such as OpenGL, DirectX, and Vulkan. These APIs provide developers with the necessary tools and libraries to harness the power of GPUs for graphics rendering and general-purpose computing tasks.

Memory Bandwidth: GPUs typically have high memory bandwidth to efficiently handle large datasets and transfer data between the GPU and system memory. This enables quick access to textures, frame buffers, and other data required for graphics rendering and computational tasks.

Optimization for Power Efficiency: GPU manufacturers have made significant efforts to optimize power efficiency, allowing GPUs to deliver high performance while consuming relatively less power. This has led to the development of energy-efficient architectures and power management techniques.
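
The parallel-processing and GPGPU characteristics above can be illustrated with a small sketch. It is a CPU-only emulation of the data-parallel programming model, not real GPU code: the names `vector_add_kernel`, `launch`, and `BLOCK_SIZE` are hypothetical, and Python threads stand in for GPU cores only to show the structure (one tiny function instance per data element), not the speed.

```python
# CPU-only sketch of the GPU data-parallel model (all names are hypothetical).
from concurrent.futures import ThreadPoolExecutor

BLOCK_SIZE = 4  # "threads" per block; illustrative only, real GPUs use far more


def vector_add_kernel(block_idx, thread_idx, a, b, out):
    """One 'thread' of work: compute a single output element."""
    i = block_idx * BLOCK_SIZE + thread_idx  # global element index
    if i < len(out):  # guard: the last block may extend past the data
        out[i] = a[i] + b[i]


def launch(kernel, n, *args):
    """Run the kernel once per (block, thread) pair covering n elements."""
    num_blocks = (n + BLOCK_SIZE - 1) // BLOCK_SIZE  # ceiling division
    with ThreadPoolExecutor() as pool:
        futures = [
            pool.submit(kernel, blk, thr, *args)
            for blk in range(num_blocks)
            for thr in range(BLOCK_SIZE)
        ]
        for f in futures:
            f.result()  # re-raise any exception from a kernel call


a = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
b = [10.0, 20.0, 30.0, 40.0, 50.0, 60.0]
out = [0.0] * len(a)
launch(vector_add_kernel, len(a), a, b, out)
print(out)  # [11.0, 22.0, 33.0, 44.0, 55.0, 66.0]
```

Each kernel call touches exactly one output element and never reads another call's result, which is why this kind of workload can be spread across thousands of cores without coordination.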

The following events represent significant milestones in the development of GPU technology, showcasing the advancements made by NVIDIA and AMD in delivering more powerful and efficient graphics processing units for gaming, professional applications, and general-purpose computing:

1999 - NVIDIA GeForce 256: NVIDIA releases the GeForce 256, marketed as the world's first GPU, which brings advanced 3D graphics rendering capabilities to consumer-grade computers.

2000 - ATI Radeon Series: ATI Technologies (now part of AMD) releases the Radeon series of GPUs, providing strong competition to NVIDIA in the graphics card market.

2006 - NVIDIA GeForce 8800 GTX: NVIDIA launches the GeForce 8800 GTX, a powerful GPU that introduces DirectX 10 support and sets new standards for gaming graphics.

2007 - CUDA Technology: NVIDIA introduces CUDA (Compute Unified Device Architecture), enabling developers to use GPUs for general-purpose computing, leading to accelerated scientific and computational applications.

2008 - AMD Radeon HD 4000 Series: AMD releases the Radeon HD 4000 series, featuring enhanced graphics capabilities and introducing technologies like GDDR5 memory and DirectX 10.1 support.

2012 - NVIDIA Kepler Architecture: NVIDIA introduces the Kepler architecture, known for its energy efficiency and improved performance, which becomes the foundation for subsequent GPU generations.

2015 - AMD Radeon R9 Fury X: AMD unveils the Radeon R9 Fury X, featuring high-bandwidth memory (HBM) and advanced cooling solutions, delivering improved performance and memory efficiency.

2016 - NVIDIA Pascal Architecture: NVIDIA launches the Pascal architecture, known for its significant performance gains and power efficiency, powering GPUs like the GeForce GTX 10 series.

2017 - AMD Radeon RX Vega: AMD releases the Radeon RX Vega series, featuring high-performance GPUs with advanced graphics and compute capabilities, targeting gaming and professional applications.

2020 - NVIDIA Ampere Architecture: NVIDIA introduces the Ampere architecture, powering GPUs like the GeForce RTX 30 series, offering improved ray tracing, AI acceleration, and performance over the previous generation.

2020 - AMD Radeon RX 6000 Series: AMD unveils the Radeon RX 6000 series, including the RX 6800, RX 6800 XT, and RX 6900 XT, delivering high-performance gaming GPUs with advanced features.

2021 - NVIDIA GeForce RTX 30 Series Refresh: NVIDIA introduces the GeForce RTX 30 series refresh, featuring enhanced versions of their Ampere-based GPUs with improved performance and memory configurations.

In benchmark comparisons, the NVIDIA Tesla V100 GPU has outperformed the TPU across several deep learning models. The chart shows that for models like Inception v3, ResNet-50, and SSD, the GPU holds a substantial advantage over the TPU: it achieves roughly 4,000 to 8,000 images per second (IPS), while the TPU's throughput ranges from approximately 1,000 to 2,000 IPS. The results may vary depending on the GPU model, architecture, and optimization techniques.
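
To put those ranges in perspective, the short sketch below computes the smallest and largest speedup they allow. It uses only the approximate figures quoted above and assumes IPS denotes images per second; because per-model numbers are not given here, the result is a pair of bounds rather than a measured speedup. The helper name `speedup_bounds` is hypothetical.

```python
# Approximate throughput ranges quoted in the text (assumed: images per second).
gpu_ips = (4000, 8000)  # NVIDIA Tesla V100, (low, high)
tpu_ips = (1000, 2000)  # TPU, (low, high)


def speedup_bounds(fast, slow):
    """Return the (min, max) ratio fast/slow consistent with two (low, high) ranges."""
    lowest = fast[0] / slow[1]   # slowest GPU result vs. fastest TPU result
    highest = fast[1] / slow[0]  # fastest GPU result vs. slowest TPU result
    return lowest, highest


low, high = speedup_bounds(gpu_ips, tpu_ips)
print(f"GPU advantage: between {low:.1f}x and {high:.1f}x")
# GPU advantage: between 2.0x and 8.0x
```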

Semiconductor companies, in particular US companies like NVIDIA and AMD, have fueled a relentless march toward larger and more powerful AI models. The question is, who is going to dominate the new technology?

Stay tuned for the next article on The Fight for Global Dominance in the Semiconductor Industry!